Browse hundreds of images from the "Transformer vs Autoencoder: Decoding Machine Learning Techniques – be" collection, covering computer, digital, and electronic themes. The collection keeps consistent quality standards across all images and offers a range of styles to suit different aesthetic preferences. It is suitable for web design, social media, personal projects, and digital content creation, whether for commercial or personal use. All images are available in high resolution, optimized for both digital and print applications, and include metadata and tags for easy organization and quick discovery. Licensing is cost-effective, and the collection is updated regularly.

![[Translation] Variational Autoencoder in TensorFlow :: by LearnOpenCV : Naver Blog](https://learnopencv.com/wp-content/uploads/2020/11/vae-diagram-1-1024x563.jpg)

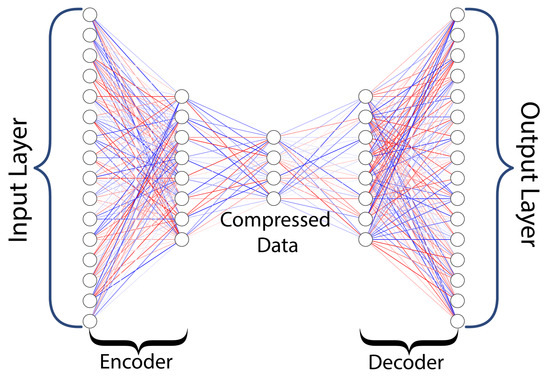

![Autoencoders in Deep Learning: Tutorial & Use Cases [2023]](https://assets-global.website-files.com/5d7b77b063a9066d83e1209c/627d121bd4fd200d73814c11_60bcd0b7b750bae1a953d61d_autoencoder.png)

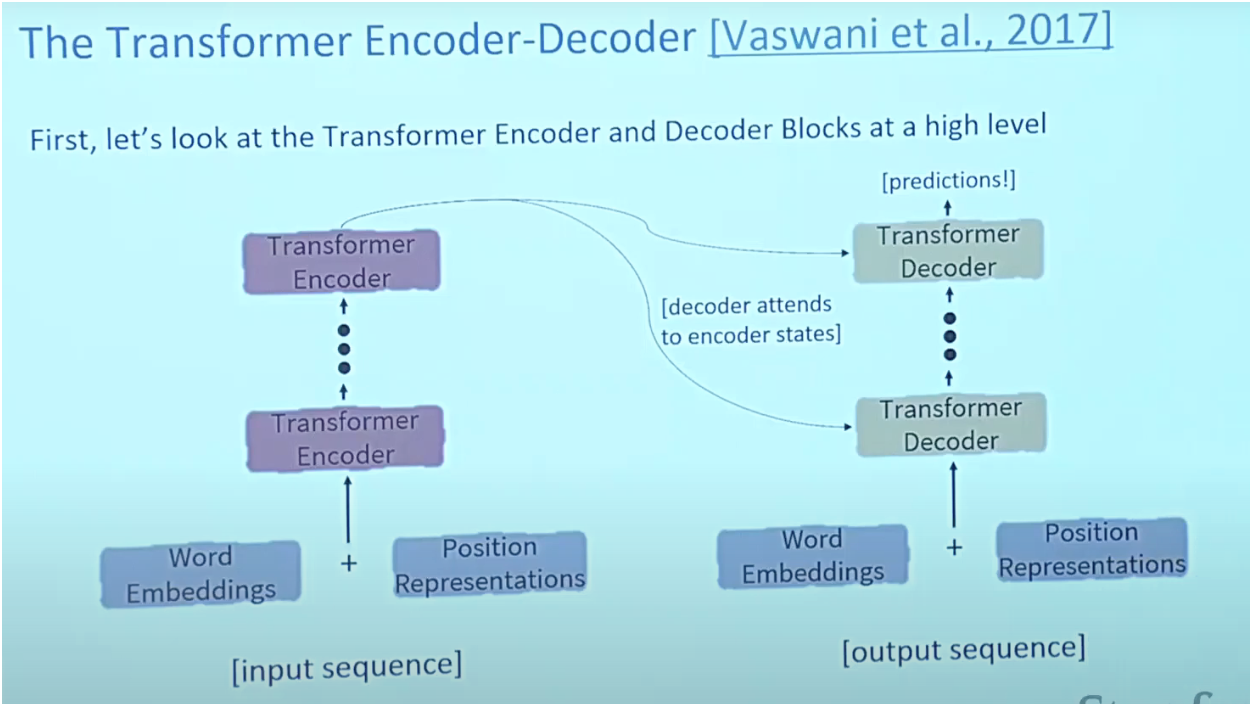

![[D] An ELI5 explanation for LoRA - Low-Rank Adaptation. : r/MachineLearning](https://www.researchgate.net/profile/Md-Arid-Hasan/publication/338223294/figure/fig2/AS:841443144900609@1577627087767/Transformer-Encoder-Decoder-architecture-taken-from-Vaswani-et-al-9-for-illustration.jpg)

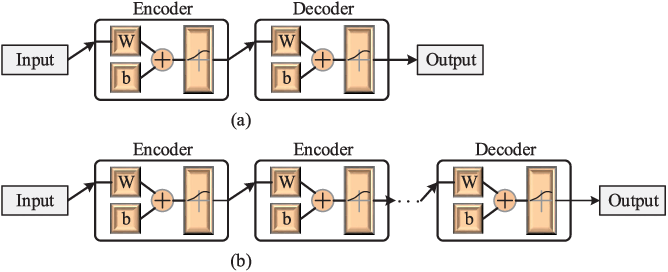

![Fig. 15, [Variations of auto-encoder. (a) The...]. - Machine Learning ...](https://www.ncbi.nlm.nih.gov/books/NBK597467/bin/515045_1_En_13_Fig15_HTML.jpg)