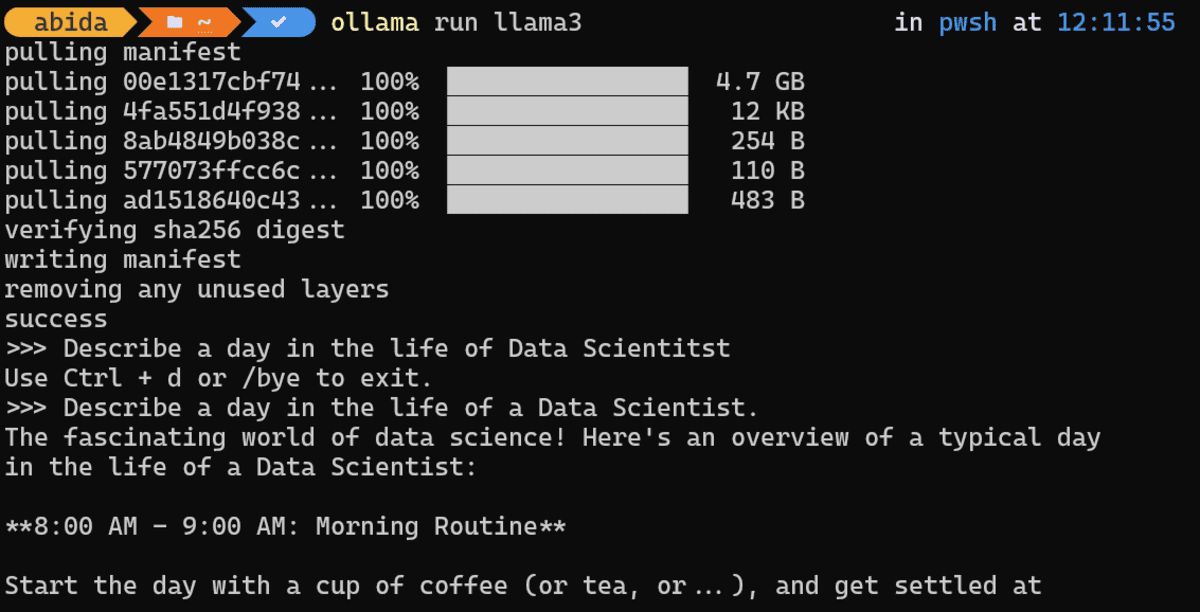

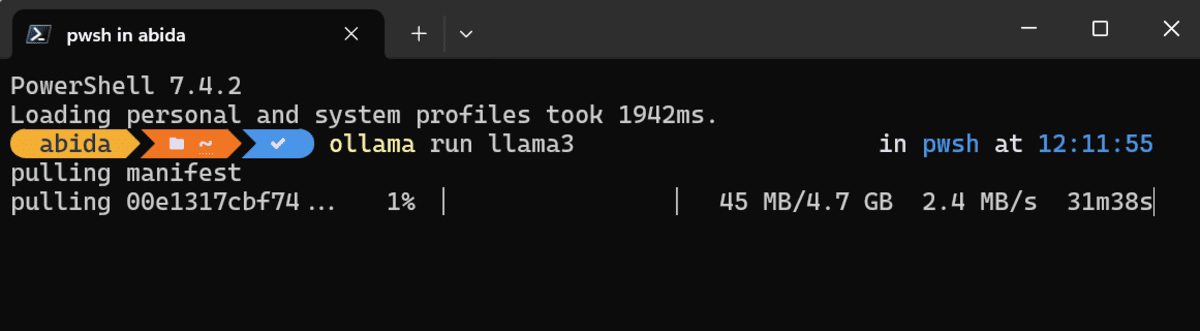

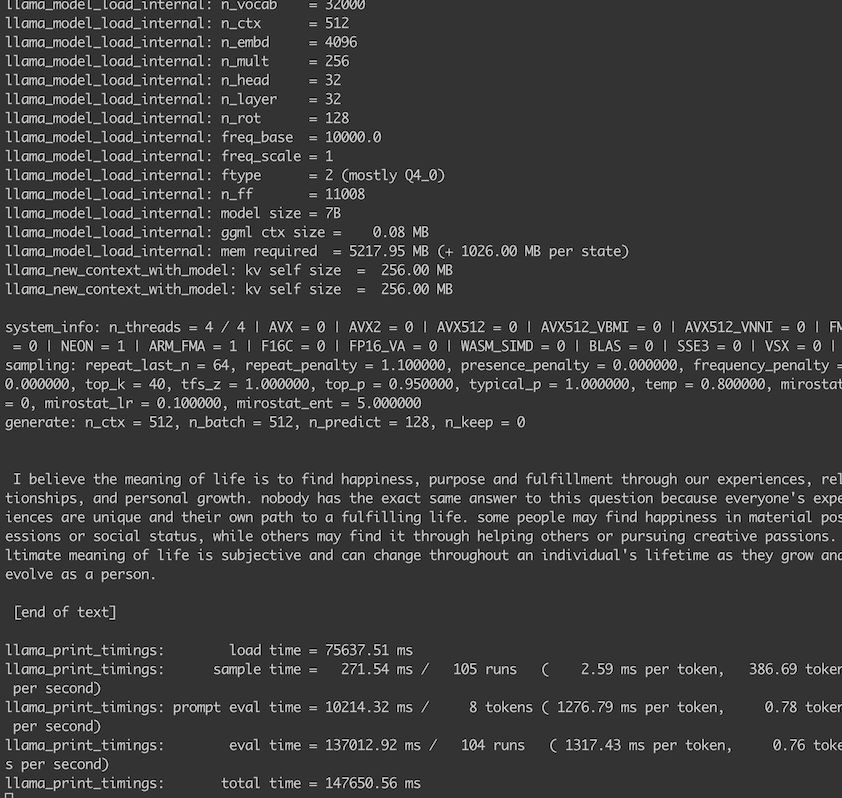

Elevate your brand with our commercial "Run Any LLM on Distributed Multiple GPUs Locally Using llama.cpp | by" gallery, which offers an extensive collection of business-ready images crafted for marketing. The collection includes high-quality photographs and pictures with excellent detail and clarity. They suit advertising and marketing campaigns, web design, social media, personal projects, and digital content creation. All images are available in high resolution with professional-grade quality, are optimized for both digital and print use, and include comprehensive metadata for easy organization. Skilled photographers add new content regularly, keeping the collection current with contemporary trends and styles for both commercial and personal projects.

![[Machine Learning] How to Install a LLama.cpp + LLM Model Runtime Environment on a MacBook](https://file.5bei.cn/2024/09/frc-bfb2e0422f3f2f9cd4825dee342da810.png)