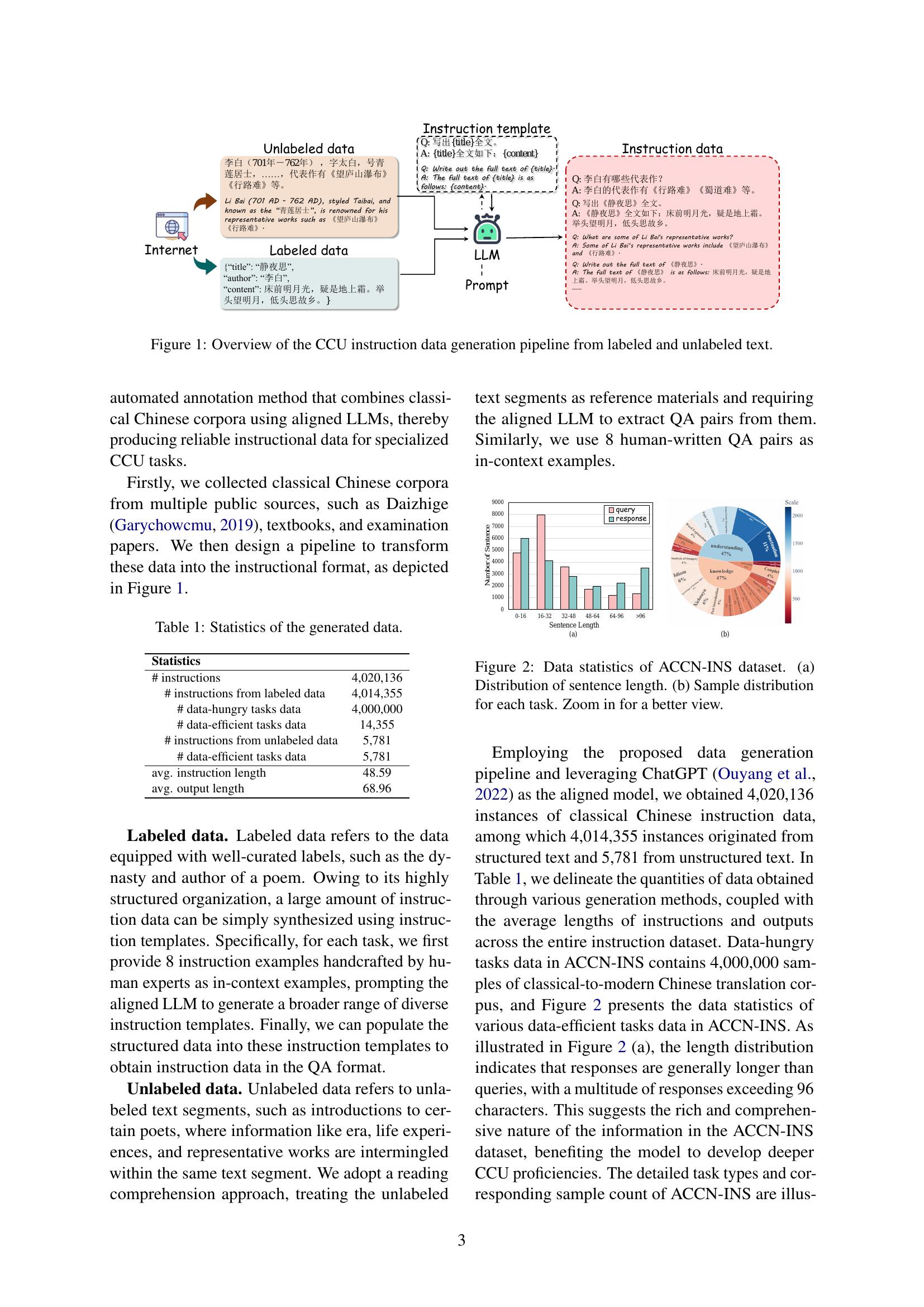

Explore our resource library of images for *Mastering Large Language Models – Part VIII: Encoder-Decoder*. The images support commercial, educational, and research use, including marketing materials, corporate presentations, advertising campaigns, and professional publications. All images are available in high resolution with multiple resolution options, are optimized for both digital and print use, and include metadata for easy organization. Each image undergoes a quality review before inclusion and can be downloaded instantly, and the collection is expanded regularly with new content.

![Studying Large Language Model Generalization with Influence ...](https://d3i71xaburhd42.cloudfront.net/04a96b66705858c988edfcb73191c1da7d54abfb/90-Table3-1.png)

![Evaluating Large Language Models Trained on Code](https://d3i71xaburhd42.cloudfront.net/acbdbf49f9bc3f151b93d9ca9a06009f4f6eb269/10-Table3-1.png)

![A Comprehensive Overview of Large Language Models](https://d3i71xaburhd42.cloudfront.net/ca31b8584b6c022ef15ddfe994fe361e002b7729/23-TableVII-1.png)

![Instruction-Following Evaluation for Large Language Models ...](https://d3i71xaburhd42.cloudfront.net/1a9b8c545ba9a6779f202e04639c2d67e6d34f63/2-Figure1-1.png)

![Evaluating Large Language Models Trained on Code](https://figures.semanticscholar.org/acbdbf49f9bc3f151b93d9ca9a06009f4f6eb269/500px/2-Figure1-1.png)

![Evaluating Large Language Models Trained on Code](https://d3i71xaburhd42.cloudfront.net/acbdbf49f9bc3f151b93d9ca9a06009f4f6eb269/8-Table2-1.png)

![Large Language Models Encode Clinical Knowledge](https://d3i71xaburhd42.cloudfront.net/6052486bc9144dc1730c12bf35323af3792a1fd0/5-Table1-1.png)

…through the angle of time directionality, addressing a question first raised in (Shannon, 1951). For large enough models, we empirically find a time asymmetry in their ability to learn natural language: a difference in the average log-perplexity when trying to predict the next token versus when trying to predict the previous one. This difference is at the same time subtle and very consistent across various modalities (language, model size, training time, ...). Theoretically, this is surprising: from an information-theoretic point of view, there should be no such difference. We provide a theoretical framework to explain how such an asymmetry can appear from sparsity and computational complexity considerations, and outline a number of perspectives opened by our results.
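To see why the passage above calls the asymmetry surprising, the sketch below computes the average log-perplexity (expected per-token negative log-likelihood, in nats) of an *exact* model in both directions. It assumes a small hypothetical joint distribution over length-3 token sequences (the vocabulary, weights, and helper names are illustrative, not taken from the source). Because the chain rule gives the same joint probability whether tokens are predicted left-to-right or right-to-left, both directions come out equal to the per-token entropy. Any gap observed in real language models must therefore come from the learned approximation, which the passage attributes to sparsity and computational complexity.

```python
import math
from itertools import product


def make_joint(vocab, length):
    # Hypothetical toy distribution: deterministic, strictly positive weights.
    seqs = list(product(vocab, repeat=length))
    weights = [1 + (7 * i) % 5 for i in range(len(seqs))]
    total = sum(weights)
    return {s: w / total for s, w in zip(seqs, weights)}


def prefix_prob(joint, prefix):
    # Marginal probability that a sequence starts with `prefix` (1.0 for an empty prefix).
    return sum(p for s, p in joint.items() if s[:len(prefix)] == prefix)


def suffix_prob(joint, suffix):
    # Marginal probability that a sequence ends with `suffix` (1.0 for an empty suffix).
    return sum(p for s, p in joint.items() if s[len(s) - len(suffix):] == suffix)


def forward_nll(joint, seq):
    # -sum_t log p(x_t | x_<t): predict each token from the tokens before it.
    return -sum(
        math.log(prefix_prob(joint, seq[:t + 1]) / prefix_prob(joint, seq[:t]))
        for t in range(len(seq))
    )


def backward_nll(joint, seq):
    # -sum_t log p(x_t | x_>t): predict each token from the tokens after it.
    return -sum(
        math.log(suffix_prob(joint, seq[t:]) / suffix_prob(joint, seq[t + 1:]))
        for t in range(len(seq))
    )


def avg_log_perplexity(joint, nll_fn):
    # Expected per-token negative log-likelihood under the exact distribution.
    length = len(next(iter(joint)))
    return sum(p * nll_fn(joint, s) for s, p in joint.items()) / length


joint = make_joint(("a", "b", "c"), 3)
fwd = avg_log_perplexity(joint, forward_nll)
bwd = avg_log_perplexity(joint, backward_nll)
print(f"forward: {fwd:.6f}  backward: {bwd:.6f}")
```

With exact conditionals the two numbers agree up to floating-point error; replacing `prefix_prob`/`suffix_prob` with separately trained, imperfect next-token and previous-token models is where a directional gap could appear.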

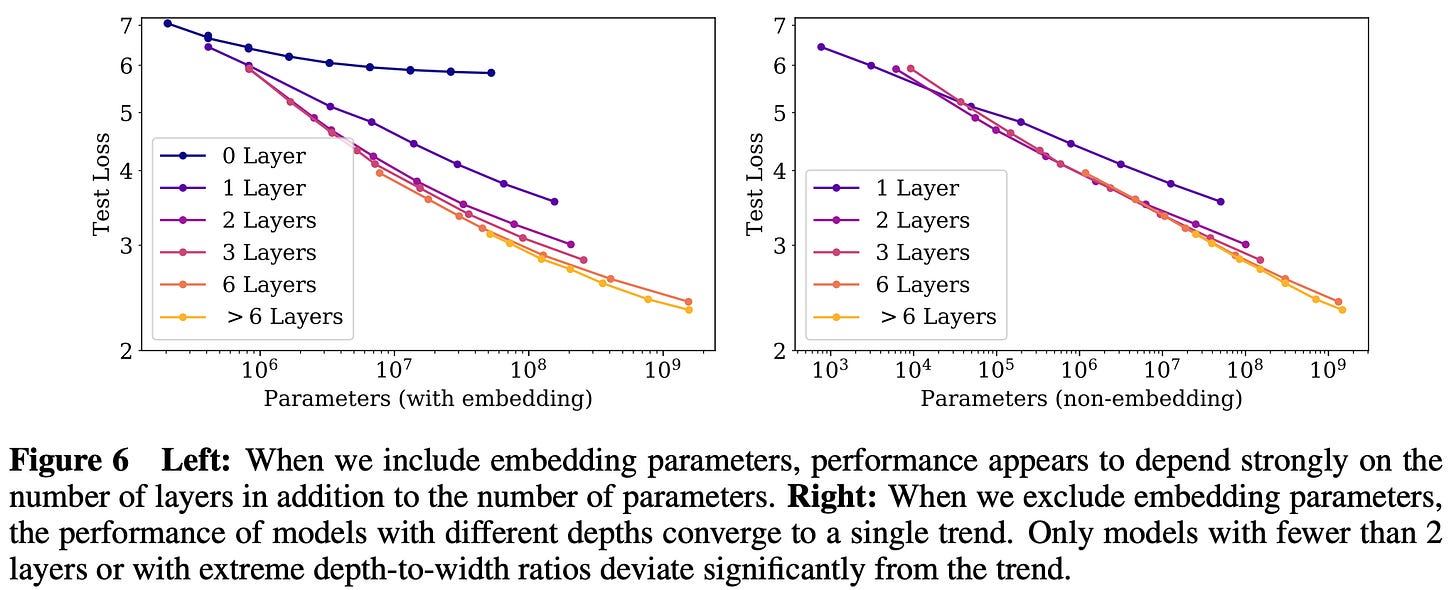

![Evaluating Large Language Models Trained on Code](https://figures.semanticscholar.org/acbdbf49f9bc3f151b93d9ca9a06009f4f6eb269/500px/5-Figure6-1.png)

![Large Language Models Encode Clinical Knowledge](https://d3i71xaburhd42.cloudfront.net/6052486bc9144dc1730c12bf35323af3792a1fd0/34-TableA.6-1.png)