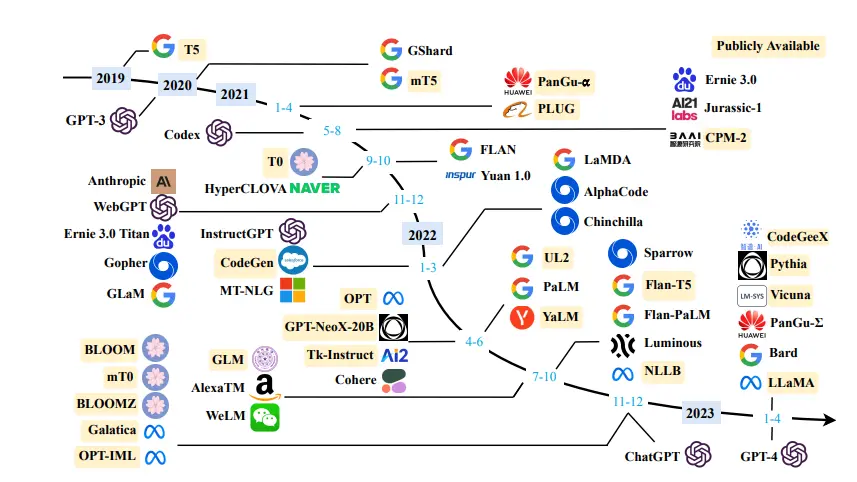

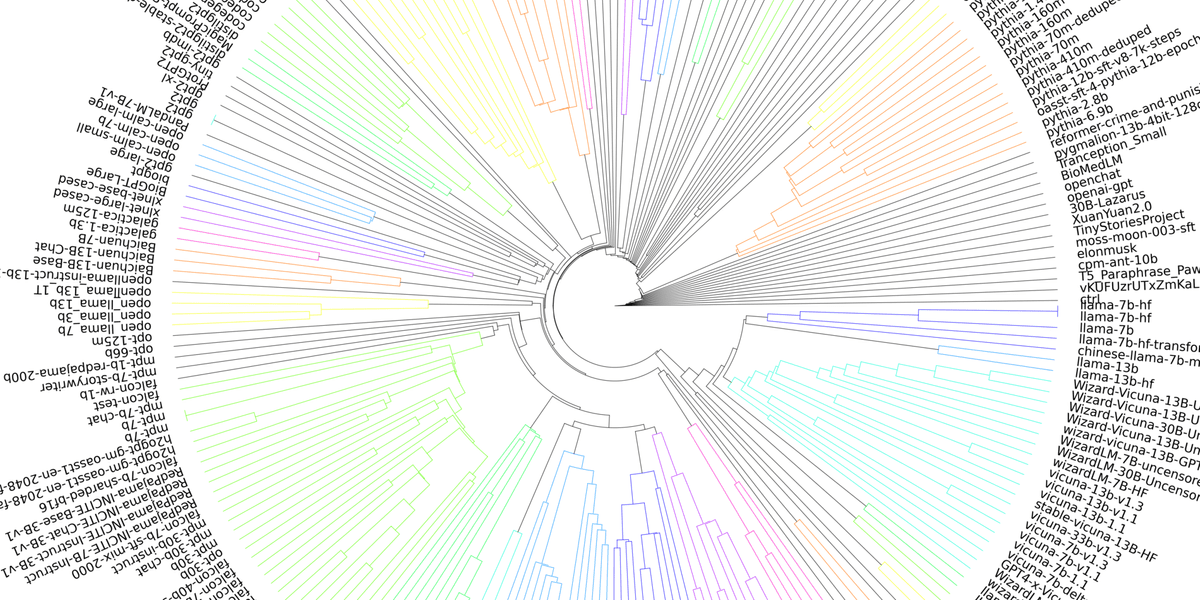

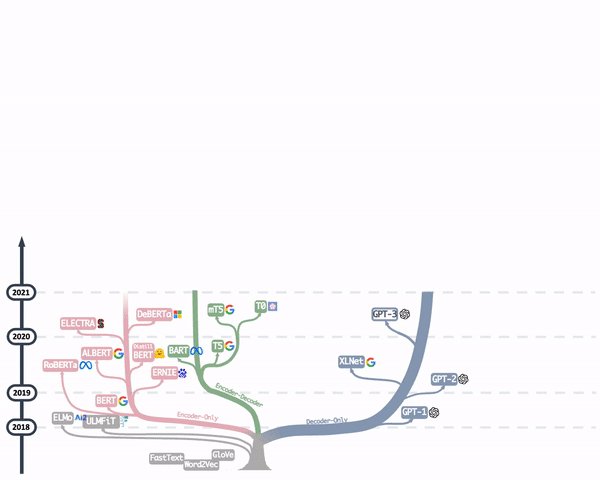

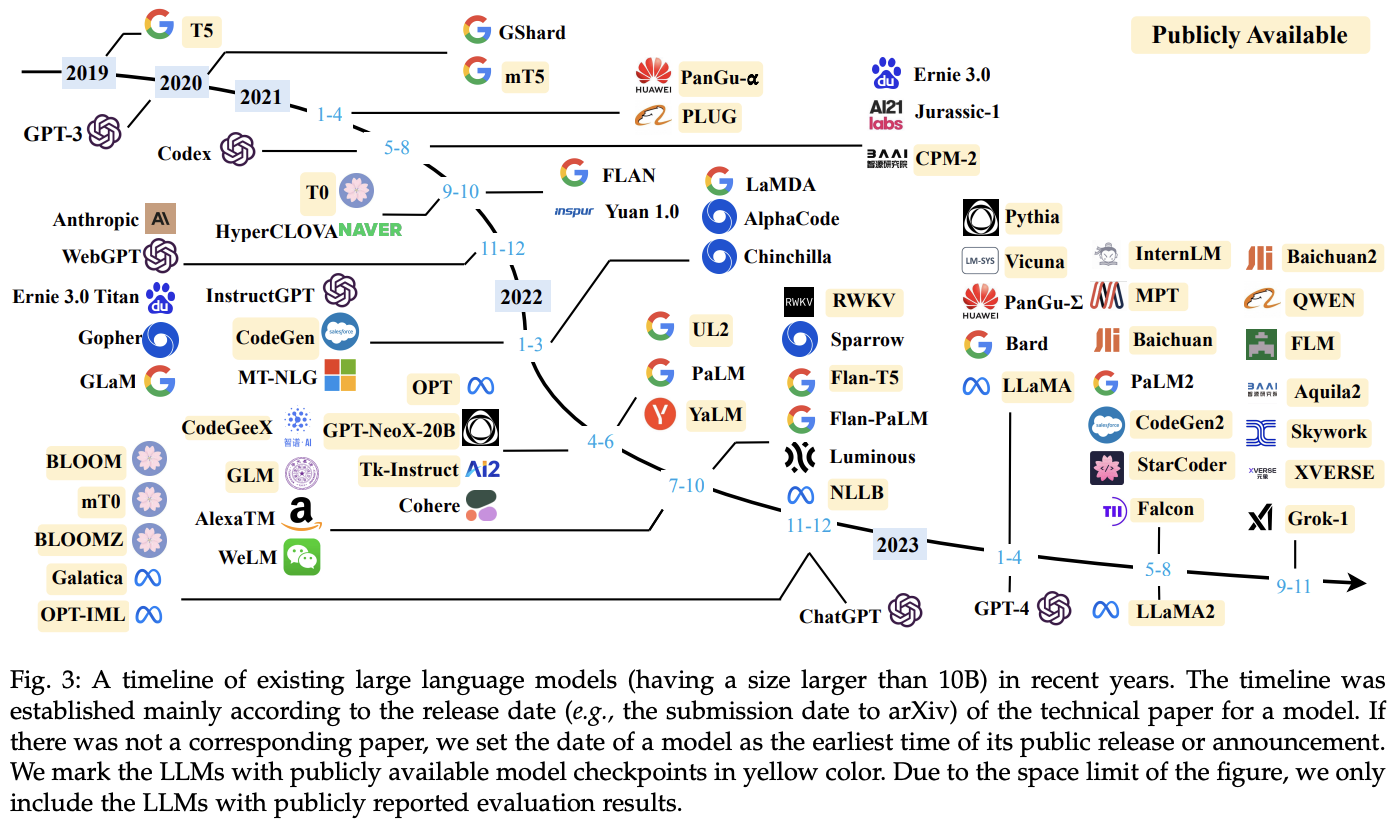

Evolution Tree Of Large Language Models Encoder And Decoder

Enhance your business with our commercial Evolution Tree Of Large Language Models Encoder And Decoder collection of professional images, designed for business applications and ideal for corporate communications and branding. Each Evolution Tree Of Large Language Models Encoder And Decoder image is carefully selected for visual impact and professional quality. The images suit various applications, including web design, social media, personal projects, and digital content creation. All Evolution Tree Of Large Language Models Encoder And Decoder images are available in high resolution, optimized for both digital and print applications, and include comprehensive metadata for easy organization and usage. Each image in the gallery undergoes a quality assessment before inclusion. The database continuously expands with fresh, relevant content, and diverse style options within the collection suit various aesthetic preferences. Regular updates keep the collection current with contemporary trends and styles.

![Evolution of Neural Networks to Language Models [Updated]](https://cdn.labellerr.com/Enhancing%20Home%20Service%20with%20Object%20Recognition/Language%20Models/0_bM9oRET5AGEdpaRv.webp)

![Evolution of Neural Networks to Language Models [Updated]](https://cdn.labellerr.com/Enhancing%20Home%20Service%20with%20Object%20Recognition/Language%20Models/Screenshot%202023-05-05%20141241.webp)

![[2402.02315] A Survey of Large Language Models in Finance (FinLLMs)](https://ar5iv.labs.arxiv.org/html/2402.02315/assets/picture2.png)

![Evolution of Neural Networks to Language Models [Updated]](https://www.labellerr.com/blog/content/images/size/w2000/2023/05/large-language-model-logical-reasoning.webp)

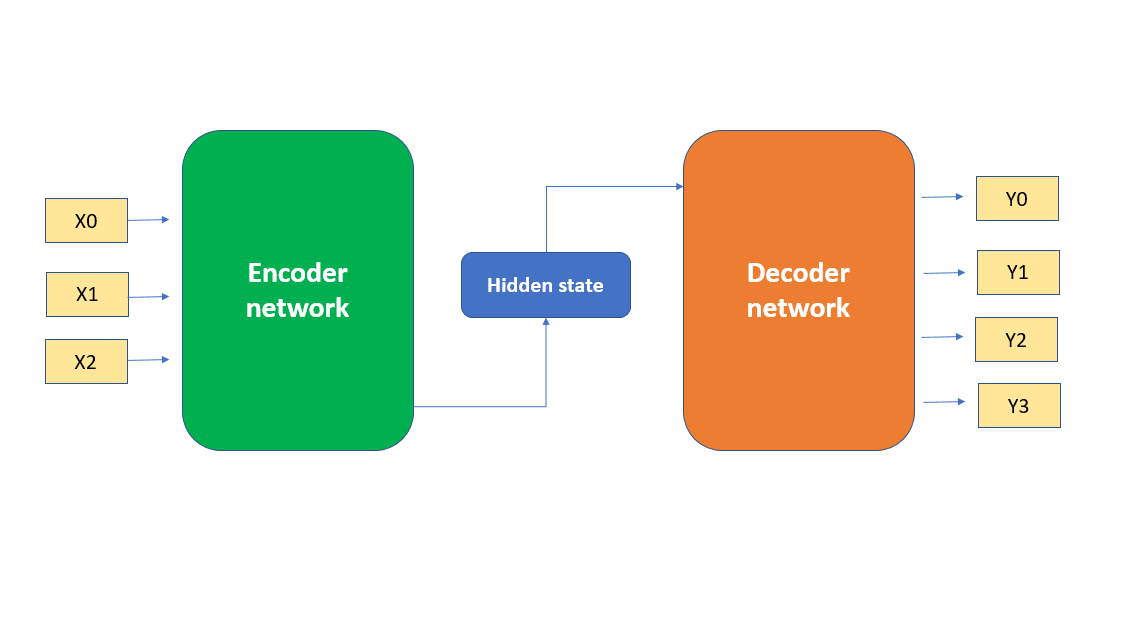

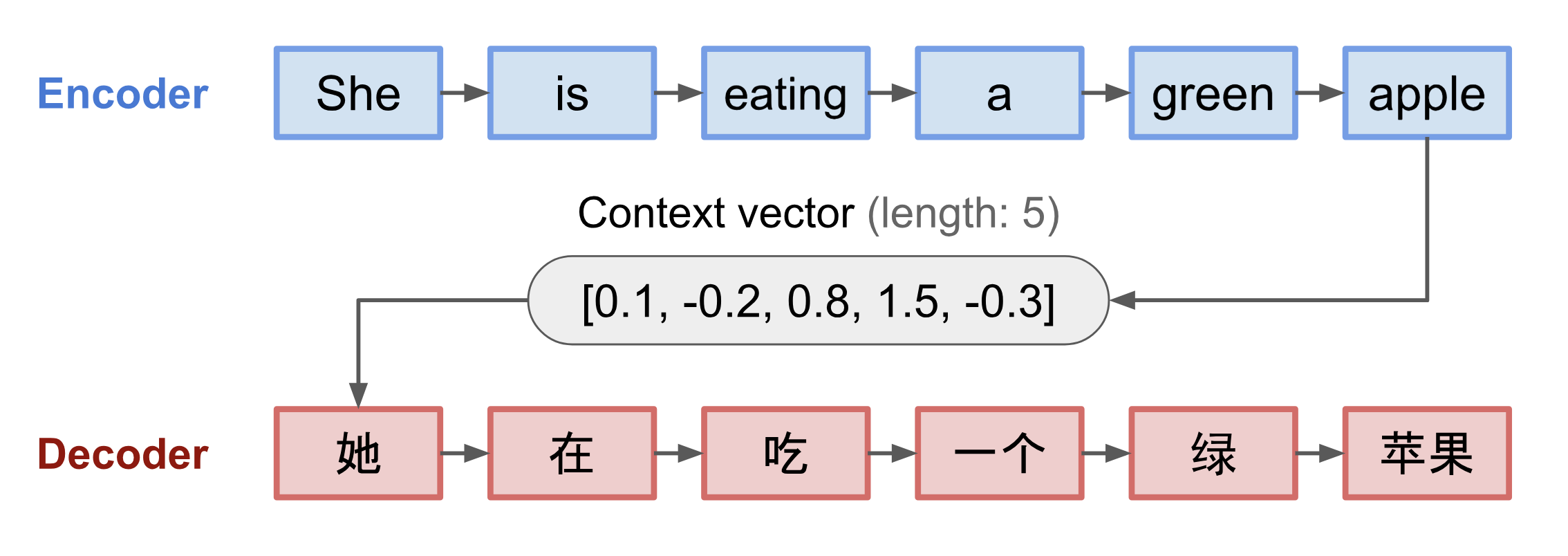

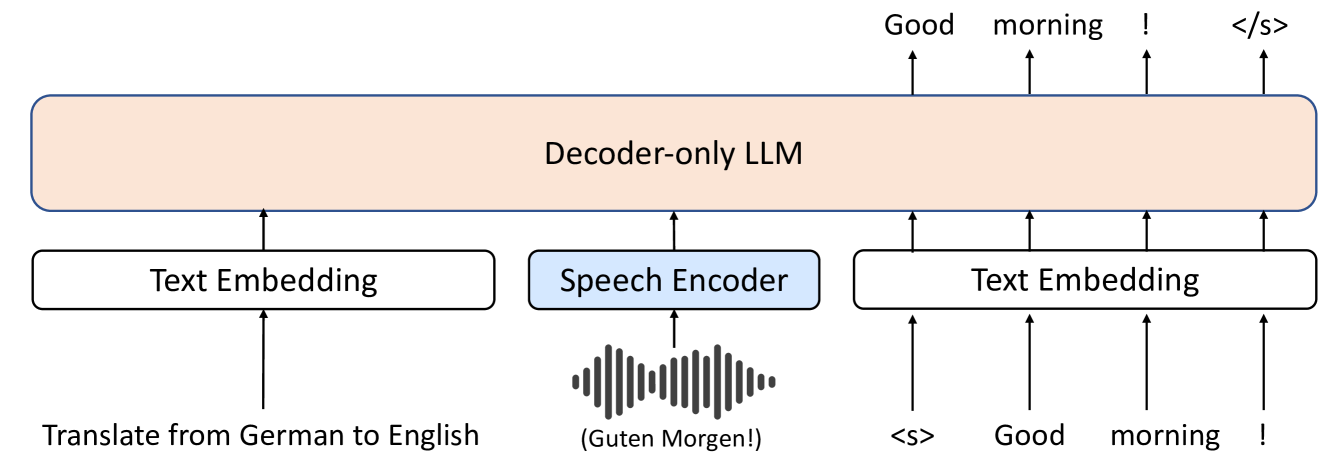

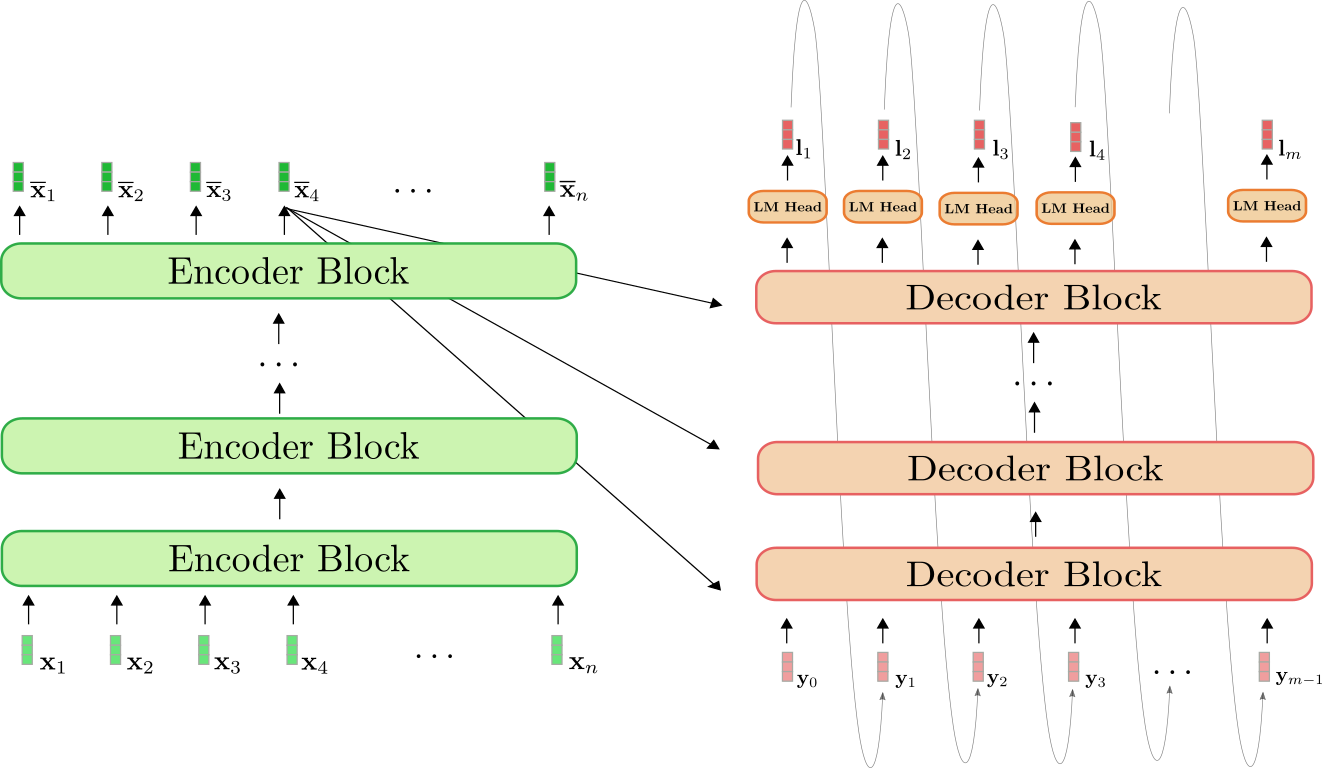

![[2307.08234] Adapting Large Language Model with Speech for Fully ...](https://ar5iv.labs.arxiv.org/html/2307.08234/assets/enc_dec.jpg)

![[2304.04052] Decoder-Only or Encoder-Decoder? Interpreting Language ...](https://ar5iv.labs.arxiv.org/html/2304.04052/assets/x1.png)