Encoder/decoder Language


![[2304.04052] Decoder-Only or Encoder-Decoder? Interpreting Language ...](https://ar5iv.labs.arxiv.org/html/2304.04052/assets/x1.png)

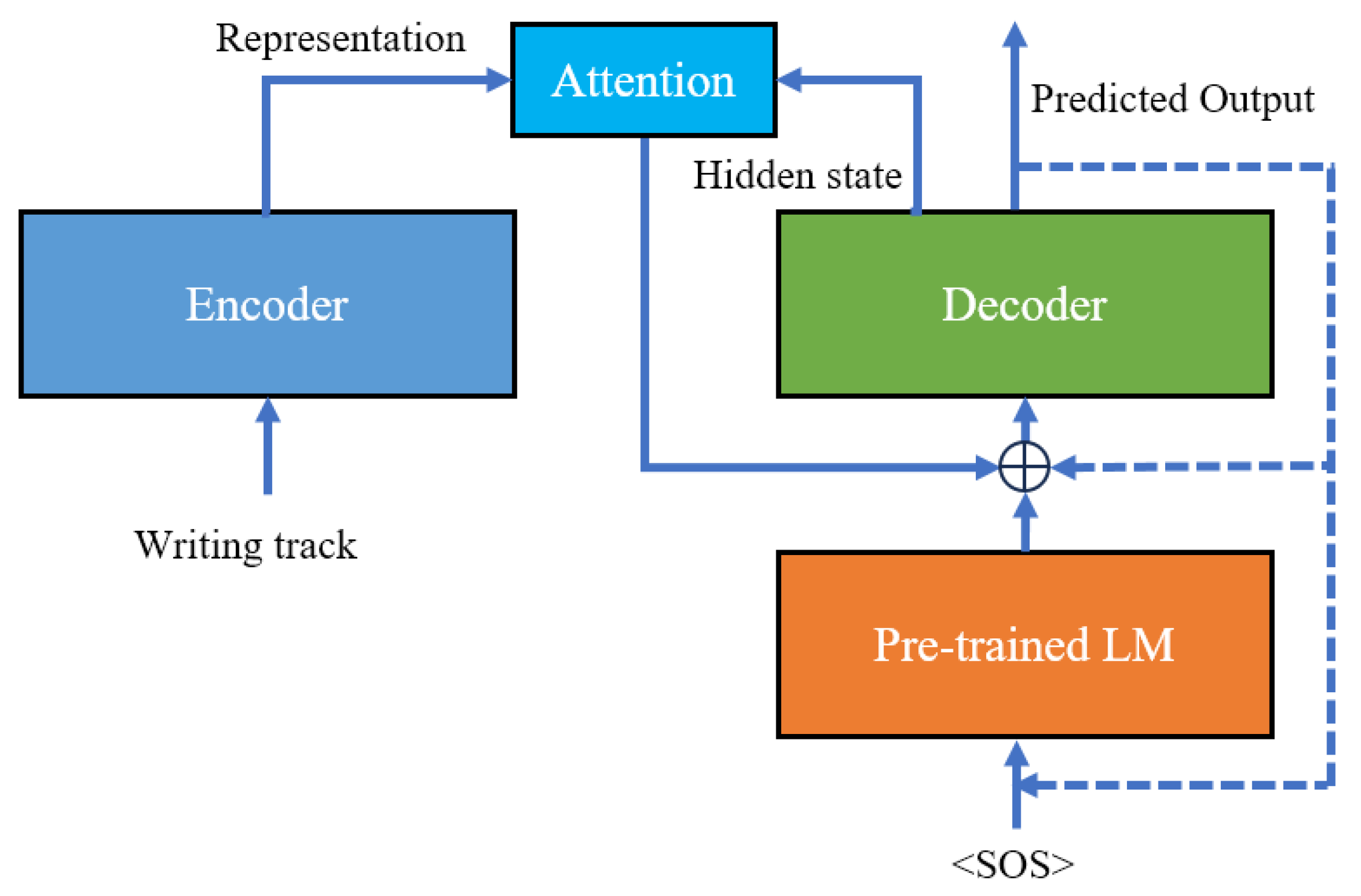

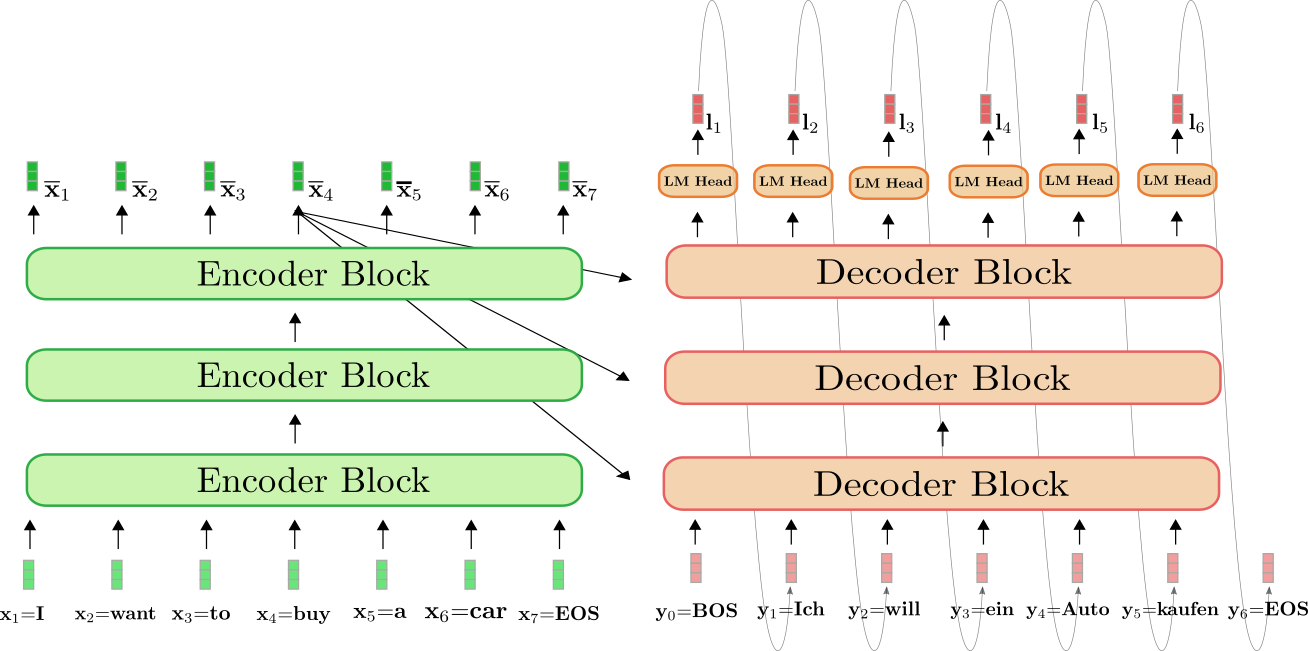

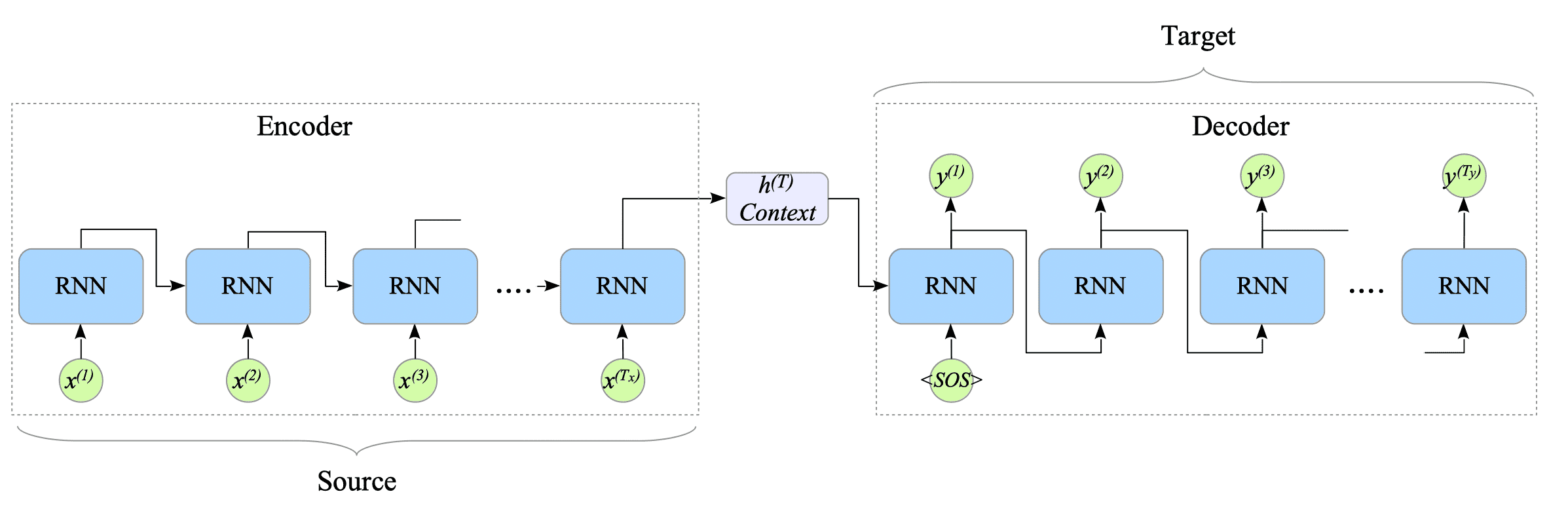

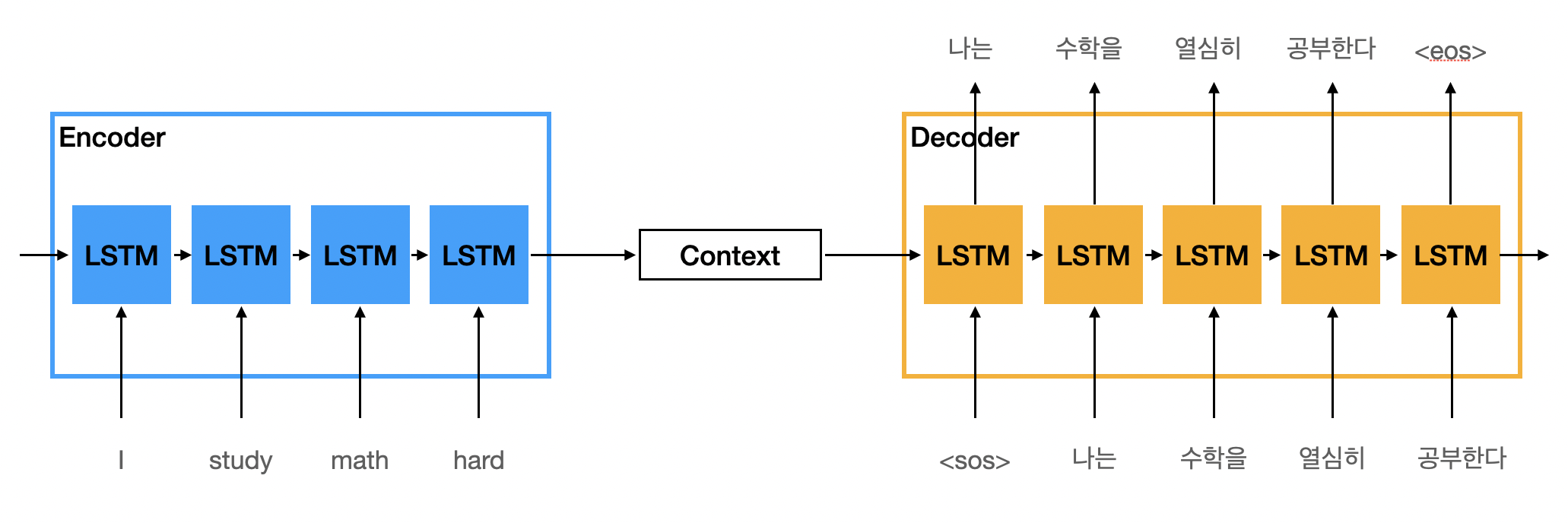

... there has been a shift towards linearization-based methods, which process structured data as sequential token streams, diverging from approaches that explicitly model structure, often as a graph. Crucially, there remains a gap in our understanding of how these linearization-based methods handle structured data, which is inherently non-linear. This work investigates the linear handling of structured data in encoder-decoder language models, specifically T5. Our findings reveal the model's ability to mimic human-designed processes such as schema linking and syntax prediction, indicating a deep, meaningful learning of structure beyond simple token sequencing. We also uncover insights into the model's internal mechanisms, including the ego-centric nature of structure node encodings and the potential for model compression due to modality fusion redundancy. Overall, this work sheds light on the inner workings of linearization-based methods and could potentially provide guidance for future research.
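The passage above contrasts linearization with explicit graph-based modeling of structure. As a minimal sketch, assuming a text-to-SQL setting where a T5-style encoder-decoder receives the question and database schema as one flat input string, linearization might look like the following. The function name `linearize_schema`, the separator conventions, and the `concert_singer` example schema are hypothetical choices for illustration, not the input format used in the work described.

```python
from typing import Dict, List


def linearize_schema(question: str, db_id: str, tables: Dict[str, List[str]]) -> str:
    """Flatten a question plus a relational schema into a single token stream.

    Hypothetical format: " | " separates segments, " : " follows a table
    name, and " , " separates columns. These separators are an assumption
    for illustration, not a fixed standard.
    """
    # Each table becomes one segment of the form "table : col1 , col2 , ..."
    # (dicts preserve insertion order, so table order is stable).
    table_segments = [
        f"{name} : {' , '.join(columns)}" for name, columns in tables.items()
    ]
    # Concatenate question, database id, and table segments into one sequence.
    return " | ".join([question, db_id] + table_segments)


if __name__ == "__main__":
    schema = {
        "singer": ["singer_id", "name", "age"],
        "concert": ["concert_id", "singer_id", "year"],
    }
    print(linearize_schema("How many singers are there?", "concert_singer", schema))
```

In the flattened string, the foreign-key link between `concert.singer_id` and `singer.singer_id` survives only implicitly, as a repeated column name. This is the kind of non-linear structure whose handling by linearization-based models the passage describes investigating.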

![[1711.06061] An Encoder-Decoder Framework Translating Natural Language ...](https://ar5iv.labs.arxiv.org/html/1711.06061/assets/x1.png)